What have we achieved when we talk about AI today?

Experts celebrate the progress of AI, but many wonder what we have really achieved and whether we can really talk about artificial intelligence.

Until recently, experts generally spoke of Machine Learning and Deep Learning applications. The generic term Artificial Intelligence, which refers to a sub-area of computer science, was rarely used. With the increasing popularity of applications such as Alexa and Siri, and then significantly accelerated by ChatGPT, public discourse changed the use of language. These applications are now referred to as Artificial Intelligences, whereby it is always implied that intelligent subjects are involved.

This view led to the expectation that, in order to justify the term AI, these intelligent agents had to be as intelligent as humans in a conscious sense. Anything else would lead to disappointment.

But is this view justified? What are the actual goals of Artificial Intelligence (as a branch of computer science), and what has been achieved? In this article, I explore this question.

What was actually intended?

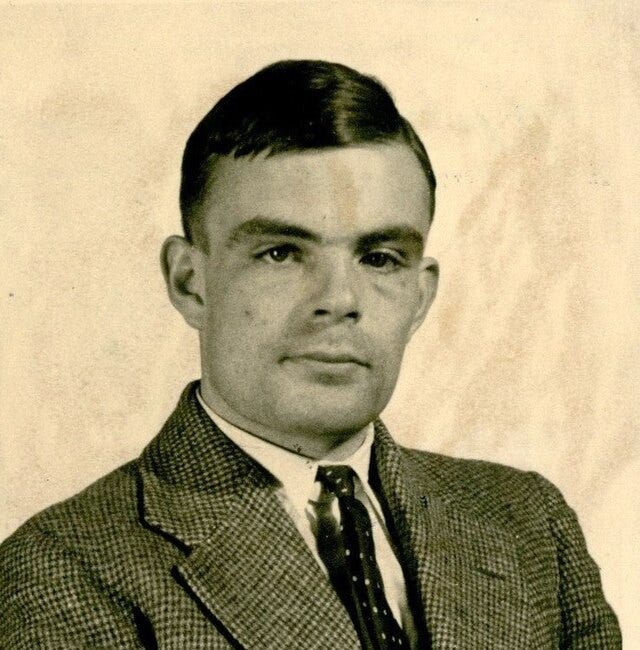

Alan Turing and Computing Machinery and Intelligence

In his seminal 1950 paper "Computing Machinery and Intelligence" [1], Alan Turing explored the potential of machines to exhibit intelligent behavior. He proposed the famous question, "Can machines think?" and introduced what is now known as the Turing Test, a criterion to determine if a machine can exhibit behavior indistinguishable from that of a human. Turing predicted that by the end of the 20th century, machines would be able to pass this test, thus demonstrating a form of intelligence [2].

Turing discussed several key areas for AI development, including:

Learning Machines: He believed that machines could be programmed to learn from experience, improving their performance over time without human intervention.

Natural Language Processing: Turing anticipated that machines would be able to understand and generate human language, enabling meaningful conversation between humans and machines.

Abstract Reasoning and Problem-Solving: He suggested that machines could solve problems and perform tasks typically requiring human intelligence, such as playing chess.

Adaptation and Evolution: Turing speculated that machines might evolve through processes akin to natural selection, becoming more sophisticated over time.

Overall, Turing was optimistic about the future of AI, envisioning machines that could think, learn, and interact with humans in increasingly complex ways.

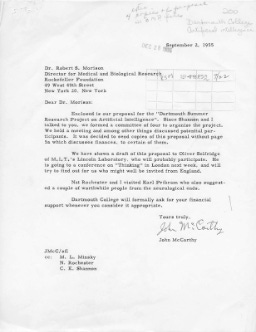

The establishment of Artificial Intelligence research

The Dartmouth Conference [3], held in 1956, set out to explore the potential for creating machines capable of simulating human intelligence. It aimed to gather leading researchers to discuss and develop methods for machines to perform tasks that would require intelligence if done by humans.

"An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves. We think that a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer." (Source)

This seminal event is considered the founding moment of artificial intelligence as a field of study, setting the stage for ongoing research into machine learning, reasoning, language processing, and other aspects of intelligent behavior in machines [4].

The approach to AI established by the members of the Conference aimed to create machines capable of simulating human intelligence. The conference proposed exploring ways for machines to use language, form abstractions and concepts, solve problems typically reserved for humans, and improve themselves over time. This approach focused on symbolic reasoning and rule-based systems, where explicit instructions and logical rules were encoded to enable machines to perform tasks. This paradigm, known as Good Old-Fashioned AI (GOFAI), sought to replicate the cognitive processes of humans through formal symbolic manipulation, setting the foundational goals and aspirations for the field of artificial intelligence.

Early challenges and critics

Moravec's paradox

Moravec's Paradox highlights that tasks humans find effortless, like sensory perception and motor skills, are incredibly complex and computationally demanding for AI, while tasks requiring abstract reasoning and logical computations, which humans find challenging, are relatively easier for AI. This paradox arises because sensorimotor skills have been refined over millions of years of evolution, making them highly efficient and intricate, whereas high-level reasoning is a more recent evolutionary development. Consequently, replicating basic human abilities such as seeing, moving, and interacting with the environment poses significant challenges for AI systems.

Hubert Dreyfus's critique

Hubert Dreyfus was a prominent critic of artificial intelligence, particularly skeptical of its ability to replicate human intelligence. His critiques can be summarized as follows:

Symbolic AI Limitations: Dreyfus argued that symbolic AI, which relies on explicit rules and representations, cannot capture the nuances of human cognition. He believed that human intelligence is largely based on tacit knowledge and intuitive skills that cannot be formalized into rules or symbols.

Embodiment and Context: He emphasized the importance of the human body's role in shaping intelligence. Dreyfus argued that AI systems lack the embodied experience and situational context that are crucial for human understanding and interaction with the world.

Non-Algorithmic Nature of Human Intelligence: Dreyfus contended that human thought processes are not purely computational or algorithmic. He drew on philosophical insights, particularly from Martin Heidegger and Maurice Merleau-Ponty, to argue that human intelligence is fundamentally different from machine processing.

Overestimation of AI Potential: Dreyfus was critical of the optimistic predictions made by early AI researchers. He believed that the complexity of human cognition and the subtleties of everyday activities were vastly underestimated by AI proponents.

Overall, Dreyfus's critiques highlighted the philosophical and practical challenges of replicating human intelligence in machines, emphasizing the importance of context, embodiment, and the non-algorithmic nature of human thought.

GOFAI failed

"AI has been brain-dead since the 1970s," said AI guru Marvin Minsky (Source).

GOFAI, or "Good Old-Fashioned Artificial Intelligence," dominated AI research from the 1950s to the 1980s and relied on symbolic reasoning and rule-based systems to simulate human intelligence. It ultimately failed due to several key limitations:

Complexity and Scalability: GOFAI systems struggled with the vast complexity and variability of real-world problems. Hand-coding rules and symbols for every possible scenario proved impractical and unmanageable as problem spaces grew.

Ambiguity and Context: These systems had difficulty handling ambiguous or context-dependent information, which humans navigate easily. They lacked the ability to understand nuances and adapt to new, unforeseen situations.

Learning and Adaptation: GOFAI lacked mechanisms for learning from data and adapting over time. Unlike modern AI approaches, such as machine learning, GOFAI systems could not improve their performance through experience.

Due to these shortcomings, GOFAI was unable to achieve the general intelligence and flexibility that researchers had hoped for, leading to a shift towards data-driven and statistical methods in AI research.

Objectives of modern Artificial Intelligence

Russell and Norvig's "Artificial Intelligence: A Modern Approach" is a widely used AI textbook, providing a comprehensive overview of the field's history, key concepts, methodologies, and advancements. It reflects the failure of Good Old-Fashioned Artificial Intelligence (GOFAI) and discusses the shift towards statistical and learning-based approaches, such as machine learning and probabilistic reasoning, which have proven more effective in adapting to complexity and uncertainty. Through various case studies and examples, the book illustrates how modern AI methods have succeeded where GOFAI failed, marking a significant evolution in AI research.

According to the book, the objective of AI is to build agents that can act intelligently. This involves designing systems that can perceive their environment, reason about what they perceive, make decisions, and take actions to achieve specific goals. The authors define AI in terms of the following four approaches:

Thinking Humanly: Creating machines that mimic human cognitive processes.

Thinking Rationally: Developing systems that use logical reasoning to solve problems.

Acting Humanly: Building agents that behave like humans, exemplified by passing the Turing Test.

Acting Rationally: Constructing agents that act to achieve the best possible outcome, or rationally, given the information and resources available.

The overarching aim is to develop systems that can function autonomously and improve their performance over time through learning and adaptation.

Then it worked with neural networks

Neural networks have achieved several goals outlined in the Dartmouth project proposal by enabling machines to perform tasks requiring human-like intelligence. Unlike GOFAI, which relied on human-generated rules, neural networks use a machine learning approach where systems learn from data. This learning-based approach has led to significant advancements in areas such as understanding and processing natural language, recognizing patterns, making decisions, and solving complex problems. Neural networks train on vast amounts of data to identify underlying structures and relationships, allowing them to generalize from examples and improve performance over time, thus fulfilling the vision of creating intelligent systems as initially proposed at the Dartmouth conference.

Alan Turing already discussed the idea of learning machines in 1950, envisioning machines that could improve their performance through experience. Around the same time, cyberneticists invented neural networks like the perceptron, which was developed by Frank Rosenblatt in 1957. These early concepts and inventions laid the groundwork for modern machine learning approaches, demonstrating the potential for machines to learn from data rather than relying solely on predefined rules. This foundational work has significantly influenced the development and success of neural networks in achieving AI research goals.
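To make the contrast between learning from data and hand-written rules more tangible, here is a minimal sketch of a perceptron-style learning rule in Python. The toy data (the logical AND function), the learning rate, and the function names are illustrative assumptions and are not taken from Rosenblatt's original work.

```python
def train_perceptron(samples, epochs=20, lr=1.0):
    """Learn weights for a single threshold unit from labeled examples."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            activation = sum(w * x for w, x in zip(weights, inputs)) + bias
            prediction = 1 if activation > 0 else 0
            error = target - prediction  # 0 when the prediction is correct
            # Weights only change when the unit makes a mistake.
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


def predict(weights, bias, inputs):
    """Apply the learned threshold unit to one input vector."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0


# Hypothetical training set: the AND function, given only as examples.
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_DATA)
```

Nobody writes down the rule "output 1 only if both inputs are 1" here. The unit arrives at suitable weights purely by correcting its own mistakes on the examples.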

What have we achieved when we talk about AI today?

Despite the failure of GOFAI, the challenges outlined by Moravec's Paradox, and Dreyfus's critique, AI has delivered impressive results since the Deep Learning revolution and the invention of foundation models. Turing's vision, as described in "Computing Machinery and Intelligence," proposed that machines could eventually think and learn like humans, using natural language and solving complex problems. The Dartmouth Conference further aimed to explore and develop methods for machines to perform tasks requiring human-like intelligence. Russell and Norvig's modern AI textbook emphasizes creating systems that can perceive, reason, learn, and act autonomously.

The advent of deep learning has significantly advanced AI capabilities. Neural networks now excel at tasks involving image and speech recognition, allowing computers to "see" and "hear," fulfilling part of the vision Turing and the Dartmouth Conference envisioned. Deep reinforcement learning has enabled machines to optimize actions in complex environments, achieving superhuman performance in games like Go, a major milestone in acting rationally and humanly. Large language models, such as GPT, have revolutionized natural language processing, enabling machines to understand and generate human language, achieving conversational abilities Turing imagined. These developments show that, while initial approaches in AI faced substantial obstacles, modern techniques have overcome many of these challenges, leading to systems that approach and sometimes surpass human performance in specific tasks, thus meeting many of the original objectives of AI research.

Seeing and hearing

AI can see and hear through the use of neural networks, which enable computers to interpret visual and auditory information. These systems are trained on annotated images and sounds, with descriptions ranging from simple labels to complex content details. By learning from this data, neural networks can generalize and accurately predict annotations for new images or sounds, such as classifying an image as a cat picture or identifying specific sounds. This capability allows AI to effectively perform tasks that require understanding and processing sensory data.

This may give the impression of understanding content from images and sounds, but in mathematical terms it is the calculation of a probability distribution over a given [5] set of possible contents, conditioned on an image or a sound.
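As a rough illustration of what "a probability distribution over given possible content" means, the following Python sketch turns raw scores into probabilities over a fixed label set. The labels and the scores are made up for this example. In a real system the scores would come from a trained neural network.

```python
import math

# Fixed, predefined label set (hypothetical); the model cannot add to it.
LABELS = ["cat", "dog", "bird"]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    shifted = [s - max(scores) for s in scores]  # for numerical stability
    exps = [math.exp(s) for s in shifted]
    total = sum(exps)
    return [e / total for e in exps]


def classify(scores):
    """scores: one raw score per label, as a network might output for one image."""
    distribution = dict(zip(LABELS, softmax(scores)))
    best = max(distribution, key=distribution.get)
    return best, distribution


# Assumed scores for a single image.
best_label, distribution = classify([2.0, 0.5, -1.0])
```

Whatever the input looks like, the answer is always a distribution over the same three labels. A picture of a horse would still be spread across "cat", "dog", and "bird".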

Acting

AI can act using reinforcement learning, often combined with neural networks [6], which allows computers to make decisions and perform actions. These systems are trained through interactions within an environment that provides rewards based on the outcomes of their actions. This training enables AI to learn and select appropriate actions in various situations, such as choosing the next move in a board game or controlling an autonomous car. By continually optimizing their actions to maximize rewards, AI systems can effectively navigate and respond to complex environments.

This may give the impression that agents based on artificial intelligence can act freely. In reality, however, a so-called optimal policy is determined, which describes the optimal choice of an action from a given set of actions for a given state. The environment is also already pre-structured by a set of state descriptions. This means that the AI-based agent can generate neither new state descriptions nor actions. In mathematical terms it is a calculation of a probability distribution for a policy over given possible actions conditioned on a state.
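The following Python sketch illustrates this closed setting with tabular Q-learning, one simple reinforcement learning method, used here in place of a deep network. The corridor environment, the reward, and all parameter values are assumptions chosen for illustration.

```python
import random

STATES = [0, 1, 2, 3]        # predefined state descriptions; 3 is the goal
ACTIONS = ["left", "right"]  # predefined action set


def step(state, action):
    """Hypothetical corridor environment: reward 1 for reaching the goal."""
    next_state = max(0, state - 1) if action == "left" else state + 1
    reward = 1.0 if next_state == 3 else 0.0
    return next_state, reward


def q_learning(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    """Estimate action values from interaction with the environment."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        while state != 3:
            action = rng.choice(ACTIONS)  # explore uniformly at random
            next_state, reward = step(state, action)
            best_next = max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


def greedy_policy(q):
    """For each non-goal state, pick the action with the highest estimated value."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in STATES if s != 3}


q_table = q_learning()
policy = greedy_policy(q_table)
```

The resulting policy is a lookup from the given states to the given actions. The greedy choice shown here is the special case of a distribution that puts all probability on one action. The agent never invents a new state or a new action.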

Understanding and generating human language

AI can understand and generate human language through large language models, which produce fluent text in conversation. Again, this might give the impression that these agents actually understand what we say and what they themselves mean when they speak. In reality, however, only the next part of a text is produced, taking into account the previous course of the conversation [7].

In mathematical terms, this is a calculation of a conditional probability distribution over the next part of the text, taking into account the previous course of the conversation.
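A heavily simplified sketch in Python can illustrate the idea. It estimates the distribution over the next word from counts in a tiny made-up corpus and conditions only on the single previous word. Real large language models condition on the whole preceding context and use neural networks, so this is an analogy under stated assumptions, not a description of how they are built.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def next_token_distribution(counts, prev):
    """Conditional probability of the next token given the previous one."""
    total = sum(counts[prev].values())
    return {tok: c / total for tok, c in counts[prev].items()}


# Hypothetical toy corpus, split into words instead of real tokens.
corpus = "the cat sat on the mat the cat ran".split()
model = train_bigram(corpus)
dist = next_token_distribution(model, "the")
```

In this corpus, "the" is followed twice by "cat" and once by "mat", so the model assigns them probabilities of 2/3 and 1/3. Generating text then amounts to repeatedly drawing the next word from such a distribution.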

Shortcomings

Despite significant advancements in AI, particularly through deep learning and foundation models, substantial progress in real-world physical interactions and dexterity remains elusive, especially in the field of robotics. Robotics faces considerable challenges in replicating the nuanced and adaptive motor skills that humans and animals perform effortlessly. Tasks such as manipulating objects, navigating through unstructured environments, and performing delicate or complex physical operations require a level of sensorimotor coordination and real-time adaptability that current AI and robotic systems struggle to achieve.

Moreover, AI is also challenged by screen understanding [8], which involves comprehending and interacting with visual information displayed on computer screens. Effective screen understanding would enable AI to seamlessly interact with a wide array of computer-mediated tasks, such as navigating user interfaces, reading and interpreting web pages, and managing software applications. This capability requires sophisticated perception, contextual understanding, and decision-making processes that are still underdeveloped. Consequently, while AI has made impressive strides in data analysis, language processing, and strategic game-playing, significant hurdles remain in achieving fluid physical interactions and comprehensive screen understanding for versatile real-world applications.

But is this really an artificial intelligence?

The question of whether modern AI, particularly large language models (LLMs), truly constitutes a kind of intelligence is complex and multifaceted. One core argument against considering these systems as truly intelligent is that predicting the next token in a sequence does not imply an understanding of what the AI is talking about. LLMs like GPT-4 operate based on patterns learned from vast datasets, using statistical correlations to generate coherent text. This process, while impressive, does not equate to comprehension. The models do not possess awareness, beliefs, or an understanding of the context in the way humans do; they merely manipulate symbols according to learned patterns.

However, despite this fundamental limitation, LLMs exhibit surprisingly rich capabilities that arise from this simple mechanism. They can generate text that seems contextually relevant, answer questions, translate languages, and even write creative content. These emergent capabilities [9] demonstrate a level of functional competence that, while not indicative of true understanding, allows these models to perform tasks that were once considered exclusive to human intelligence. This functionality challenges our definitions and expectations of what constitutes intelligence, suggesting that even without genuine understanding, machines can produce outputs that mimic intelligent behavior effectively.

Yet, the sophistication of these outputs does not imply that computers are able to think as humans do. Human thinking involves reasoning, reflection, and a depth of understanding that goes beyond mere pattern recognition. It encompasses a sense of self, the ability to deliberate, and the capacity to understand abstract concepts and emotions. AI, in its current form, lacks these qualities. It does not reason about the world in a human-like way, nor does it possess the reflective consciousness that characterizes human thought.

Assessing whether these systems truly embody artificial intelligence remains a challenge. Despite its shortcomings, no better evaluation method has emerged than the Turing Test, which Alan Turing proposed in 1950. The Turing Test suggests that if a machine can engage in a conversation indistinguishable from that with a human, it could be considered intelligent. While this test focuses on behavior rather than understanding, it provides a practical benchmark for evaluating AI. As LLMs increasingly pass variations of the Turing Test, we are prompted to reconsider our criteria for intelligence and to recognize the nuanced and layered nature of this evolving field.

What do we really know about human intelligence?

The discussion so far has often suggested that we have a solid grasp of how human intelligence works. However, can we truly speak with confidence about our capabilities, such as reasoning, understanding, and possessing true intelligence? Human cognition is a complex and often enigmatic phenomenon, and while we have made strides in understanding some aspects, many questions remain unanswered.

Introspection and reflection, long valued in philosophy, are inherently language-based and subjective. These methods of self-examination do not reveal the underlying mechanisms of our thought processes. They offer insights into the content of our thoughts and the way we experience them, but they fall short of explaining the cognitive and neural substrates that give rise to these experiences.

Modern science, particularly neuroscience, has further complicated our understanding of the autonomous subject central to Enlightenment thinking. Mechanistic views of the brain and experiments like those conducted by Benjamin Libet challenge the notion of free will by suggesting that decisions may be initiated subconsciously before becoming conscious. Theories such as Active Inference [10][11] and Predictive Coding [12] propose that the brain functions primarily as a prediction machine, constantly generating and updating models of the world to minimize prediction errors. Anil Seth's research [13] builds on this by suggesting that our conscious experience is a controlled hallucination, shaped by the brain's predictions and sensory inputs to create a coherent reality.

Further insights come from research with split-brain patients, individuals who have had the corpus callosum (the bundle of nerves connecting the two hemispheres of the brain) severed. Studies with these patients reveal that the two hemispheres can function independently and even possess separate streams of consciousness. This research challenges the notion of a unified self and suggests that what we consider a single, coherent consciousness may actually be a more fragmented and distributed process than previously thought.

These perspectives suggest that what we perceive as reasoning and understanding may be the product of complex, unconscious processes aimed at predicting and responding to our environment. This challenges the traditional view of the autonomous, rational human subject and suggests that our understanding of intelligence, both human and artificial, must account for these underlying mechanisms and the limitations they impose. As we advance in AI, these insights from neuroscience can help us refine our approach and deepen our understanding of what it means to think and understand.

Is disappointment justified?

Putting it all together, AI has made remarkable strides: it can now see and hear, talk, and act. Despite these impressive capabilities, there are still significant limitations. Computers are not yet able to perform all the tasks humans can, particularly when it comes to real-world physical interactions and dexterity. This is compounded by the closed nature of AI models, which rely on predefined labels, states, and actions, restricting their ability to extend or adapt their understanding of reality. Embodiment, that is, physical presence in and interaction with the world, remains a critical challenge for developing truly autonomous intelligent agents.

Two questions arise regarding expectations for AI:

1. Are disappointed expectations justified given the goals and achievements so far?

2. Can the current neural network-based approach achieve these goals?

Regarding the first question, I tend to say that disappointment is not justified. Although the goals have not yet been fully realized, the progress made is more than impressive and will increasingly shape our daily lives. The achievements in AI are significant and transformative, showcasing capabilities that were once thought to be far in the future.

The second question is more challenging to answer. On one hand, we have seen that the current approach, based on neural networks, continues to produce increasingly impressive and, importantly, emergent capabilities in AI. These systems have demonstrated remarkable abilities in understanding and generating language, recognizing patterns, and making decisions.

On the other hand, it is unclear whether what seems to be missing in AI—reflection, understanding, and reasoning—actually exists in humans in the way we imagine. Some theories suggest that our brains might function like statistical models, as indicated by deterministic models of brain processes, which imply the absence of free will. This perspective is supported by findings in neuroscience, which increasingly challenge our traditional views on human cognition and autonomy.

In conclusion, while there are legitimate questions about whether AI can ever fully replicate human intelligence, the achievements so far are undeniably impressive. The ongoing advancements suggest that we may continue to see surprising and emergent capabilities from AI, even if the ultimate goal of replicating human-like understanding and reasoning remains elusive for now.

[1] https://phil415.pbworks.com/f/TuringComputing.pdf

[2] Some researchers evaluated GPT-4 in a public online Turing test. The best-performing GPT-4 prompt passed in 49.7% of games, outperforming ELIZA (22%) and GPT-3.5 (20%), but falling short of the baseline set by human participants (66%). For the paper see here: https://arxiv.org/abs/2310.20216

[3] https://www.kaggle.com/discussions/general/246669

[4] This is a simplified overview. In reality, there was other research into what we now call artificial intelligence both before and parallel to the Dartmouth Conference, particularly in the field of cybernetics. Ultimately, neural networks, and consequently deep learning, foundation models, and large language models (LLMs), emerged from cybernetic research, while the methods pursued by the Dartmouth Conference protagonists, now known as Good Old-Fashioned AI, failed. However, since the conference established the term Artificial Intelligence and explicitly laid out its objectives in its proposal, I have focused more on it here. This discussion does not cover the significance of cybernetics or the more complex history of AI, including the conflicts between cognitivists and connectionists.

[5] The model can only assign probabilities to members of a given set of possible labels. The model cannot come up with any new labels. Basically, it cannot create new names for object classes.

[6] This approach is called Deep Reinforcement Learning.

[7] Technically speaking, sequences of tokens are processed. These are chunks of text that are generally smaller than words.

[8] For a recent paper about the ability of LLMs to comprehend and interact effectively with user interface (UI) screens see here: https://arxiv.org/abs/2404.05719

[9] Described and discussed in Emergent Abilities of Large Language Models: https://arxiv.org/abs/2206.07682

[10] https://mitpress.mit.edu/9780262045353/active-inference/

[11] https://www.penguin.co.uk/books/313594/the-experience-machine-by-clark-andy/9780141990583

[12] https://global.oup.com/academic/product/surfing-uncertainty-9780190933210

[13] https://www.anilseth.com/read/books/